After finally ripping out the old leaderboard and metrics system, it’s time to build a new one!

I’d technically already completed the first significant chunk of the new system months before, described in the Ultimate Roguelike Morgue File series. In particular the “Preparation” section in Part 1 describes how I created an external file to store all the scoreTypes and their associated parameters. This will again become important later in the process.

Now as much as I love the new text format for scoresheets, we’re certainly not going to be uploading text files anymore. The new online data system needs to be elegant, more compact, more secure, and easier to manage overall, not just a bunch of text files that are uploaded by players then batch downloaded and manually run through a dedicated analysis program :P

Protocol Buffers

The first step on this new route is to decide what format to store the scoresheet in. Technically Cogmind’s score data already has its own binary format, a well-organized one that I use to keep it in memory and, of course, compressed in the save file itself. And it works well for this purpose, but it’s just my own format, and therefore not widely compatible or as easily usable outside Cogmind. So we need an additional format for scoresheet data, one that will be more suitable for upload, storage, and manipulation.

Will Glynn, the networking pro helping me with the server side of this new adventure, suggested we use protocol buffers, also known as “protobufs.” Protobufs are apparently a popular solution for this sort of thing (and storing data in general!), with a number of features we’ll find useful:

- Protobufs are a compact binary format, which is important considering the sheer number of values stored in the scoresheet for a single run--up to tens of thousands of data points and strings!

- Protobufs can be easily converted to other formats like JSON (in fact they’re kinda like binary JSON data to begin with), and be parsed by a bunch of existing libraries for analysis or other uses, not to mention the accessibility benefits of support across many languages.

- Protobufs are designed to be easily extensible while remaining backwards and forwards compatible. As long as no data identifiers are changed, we can add new data to later versions of the game and these scoresheets can be uploaded normally, even if the server doesn’t yet understand them--the ability to interpret new data can be added later if desired, and the data will still be there all the same. This makes it easier for me to put out new versions that extend the scoresheet without resetting the leaderboards, which I couldn’t do before since the analyze_scores.exe I talked about last time, used to build the leaderboards and produce stats, expects everything to remain unchanged from when a particular version was released. (This is why the leaderboards prior to Beta 9 were only ever reset with major update releases, and scoresheet updates always had to be postponed until that happened, even if minor updates could have benefited from including new entries.)
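To make the compatibility point concrete, here is a minimal sketch of the idea behind the protobuf wire format for integer fields. It is not the protobuf library itself and handles only varint fields; the function names (`appendVarintField`, `decodeKnownFields`) are made up for illustration. Each value is preceded by a tag holding its field ID, so a reader that doesn't recognize an ID can step over that field and carry on.

```cpp
#include <cstddef>
#include <cstdint>
#include <map>
#include <set>
#include <vector>

// Append a base-128 varint: 7 bits per byte, lowest bits first,
// with the high bit set on every byte except the last.
void appendVarint(std::vector<std::uint8_t>& out, std::uint64_t value) {
    while (value >= 0x80) {
        out.push_back(static_cast<std::uint8_t>((value & 0x7F) | 0x80));
        value >>= 7;
    }
    out.push_back(static_cast<std::uint8_t>(value));
}

// Append one integer field. The tag packs (field ID << 3) | wire type,
// where wire type 0 means "varint".
void appendVarintField(std::vector<std::uint8_t>& out,
                       std::uint32_t fieldId, std::uint64_t value) {
    appendVarint(out, static_cast<std::uint64_t>(fieldId) << 3);
    appendVarint(out, value);
}

// Read a varint starting at pos, advancing pos past it.
bool readVarint(const std::vector<std::uint8_t>& in, std::size_t& pos,
                std::uint64_t& value) {
    value = 0;
    for (int shift = 0; shift < 64 && pos < in.size(); shift += 7) {
        std::uint8_t byte = in[pos++];
        value |= static_cast<std::uint64_t>(byte & 0x7F) << shift;
        if (!(byte & 0x80)) return true;
    }
    return false;  // truncated or overlong input
}

// An "old" reader that only understands the field IDs in `known`.
// Fields with any other ID are stepped over instead of causing an error.
bool decodeKnownFields(const std::vector<std::uint8_t>& in,
                       const std::set<std::uint32_t>& known,
                       std::map<std::uint32_t, std::uint64_t>& result) {
    std::size_t pos = 0;
    while (pos < in.size()) {
        std::uint64_t tag = 0, value = 0;
        if (!readVarint(in, pos, tag)) return false;
        if ((tag & 0x7) != 0) return false;  // this sketch handles varints only
        if (!readVarint(in, pos, value)) return false;
        std::uint32_t fieldId = static_cast<std::uint32_t>(tag >> 3);
        if (known.count(fieldId)) result[fieldId] = value;
    }
    return true;
}
```

Because the ID travels with the data, renumbering a field changes what existing readers think the bytes mean, which is why the identifiers have to stay fixed once they are in use.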

Setting up Protobufs

Protobufs it is, so let’s set it up! The docs are pretty good, and include API references and tutorials in a range of different languages, including C++ (which I’m using).

The first step is to download the library from GitHub, and in my case I had to use a slightly older version, the last to support Visual Studio 2010, since that’s what I’m still using to develop Cogmind. It includes a readme with setup instructions for both the protobuf library against which to link the game, and for “protoc,” the protobuf compiler, which is essentially used to read the game’s specifications for the protobuf content and generate a header-source pair of files for reading and writing the data from and to that format.

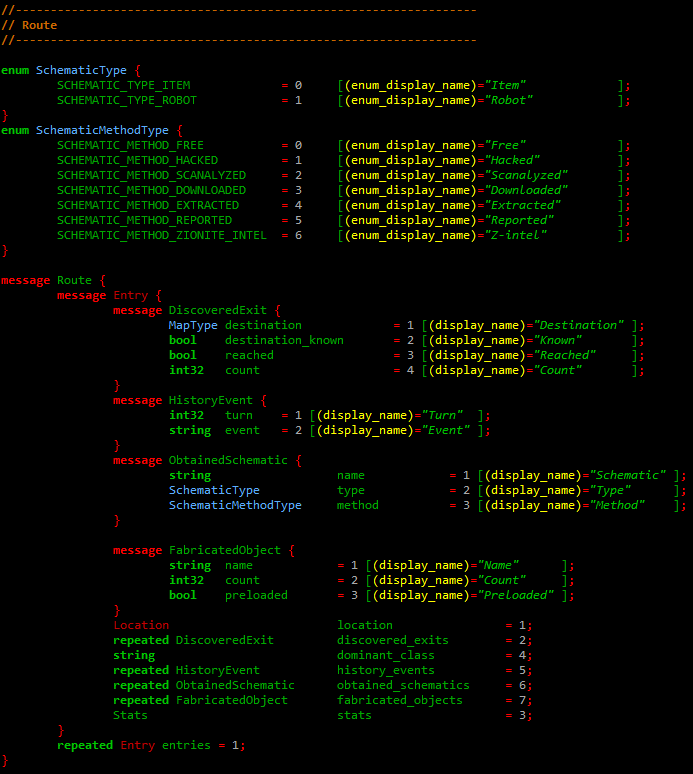

The data format is initially defined in one or more text files with the “.proto” extension, and anyone with that file can read and write a proper protobuf scoresheet. Cogmind just has the entire scoresheet data for a single run defined in one 1,811-line file.

An excerpt from Cogmind’s scoresheet.proto. Notice the ID numbers associated with each entry. Once set and used, those can’t change if you want to retain compatibility across versions, since from that point on they identify the specified data type. Protobufs support structures (“messages”), nested structures, enums, and common data types like integers, booleans, strings, and more.

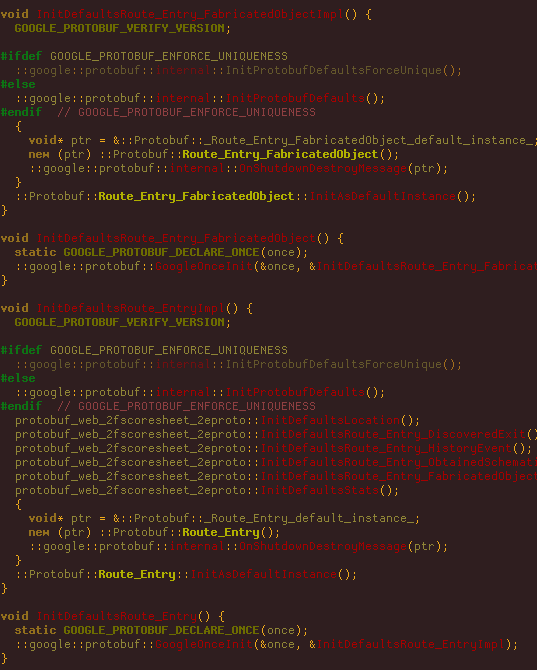

Protoc reads that file and turns it into code, altogether generating functions totaling 2.85 MB. And that’s with the .proto file specifying “option optimize_for = CODE_SIZE”! This results in lighter code at the cost of runtime performance, but Cogmind isn’t making heavy or wide use of Protobufs, just for a one-time upload of the run data, so tight performance isn’t a requirement. (Out of curiosity I compared the results, and without CODE_SIZE the protobuf files come out to 5.34 MB. Cogmind’s entire source itself is only 7.79 MB xD)

An excerpt from the 22,948-line pb.cc file generated by Protoc, corresponding in part to the .proto file section shown before.

Loading Protobufs with Game Data

We have a format, we have the code, now to put the real data in the format using that code :)

Again the docs came in handy, although there’s more than one way to do it and the tutorial doesn’t cover them all, so I tested several approaches and also looked around at several other websites for use cases and inspiration before settling on a final method.

In my own projects I like to use enums for everything, making it easy to write code for loading, moving, comparing, and retrieving large amounts of data, but protobufs have a separate set of functions for every. single. piece. of data. xD

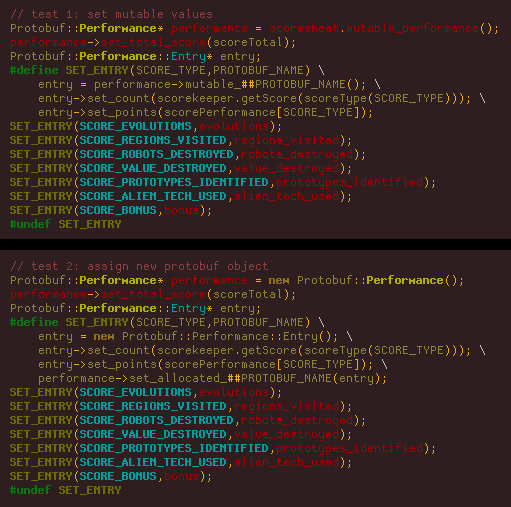

This complicates things a bit, and at first I tested the idea of using macros to set values by retrieving a pointer to mutable protobuf data, or assigning a new protobuf object, where the macros are used to call the necessary function names.

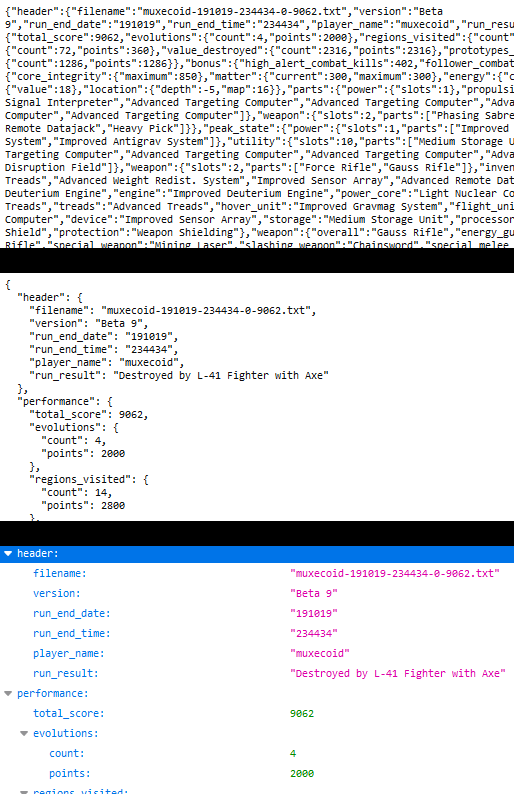

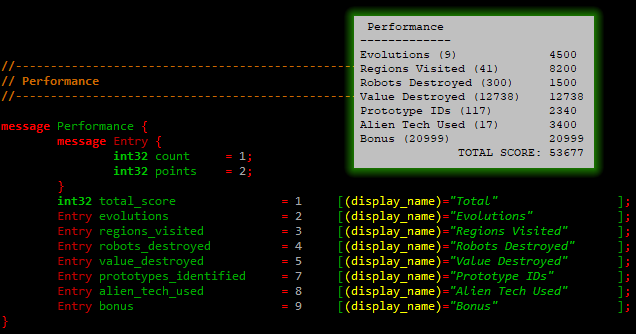

In both cases those code sections are merely populating values for the relatively simple Performance section of the Cogmind scoresheet, which you can see below.
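For readers who haven't used this trick, the macro idea can be sketched with token pasting. This is only an illustration under assumptions: `PerformanceMsg` is a hand-written stand-in for a protoc-generated class, its two fields are hypothetical, and `SET_FIELD` is a made-up macro name. The one detail taken from protobuf itself is that generated C++ classes expose a `set_<field>()` method per scalar field.

```cpp
// Stand-in for a protoc-generated message class (hypothetical fields).
class PerformanceMsg {
public:
    PerformanceMsg() : evolutions_(0), regions_visited_(0) {}
    void set_evolutions(int v) { evolutions_ = v; }
    void set_regions_visited(int v) { regions_visited_ = v; }
    int evolutions() const { return evolutions_; }
    int regions_visited() const { return regions_visited_; }
private:
    int evolutions_;
    int regions_visited_;
};

// Token pasting (##) builds the setter name from the field name, so
// SET_FIELD(msg, evolutions, n) expands to (msg).set_evolutions(n).
#define SET_FIELD(msg, field, value) (msg).set_##field(value)

// One line per value: fine for a small section, tedious for a large one.
void loadPerformance(PerformanceMsg& msg, int evolutionCount, int regionCount) {
    SET_FIELD(msg, evolutions, evolutionCount);
    SET_FIELD(msg, regions_visited, regionCount);
}
```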

Cogmind scoresheet.proto excerpt defining Performance data, and an inset demonstrating what that message represents in the scoresheet itself.

Looking back at the macro-powered source, either of these methods works fine if there’s a little bit of data--who cares, just get it done, right? But we’re going to need to also come up with a more efficient way to do this, one that doesn’t involve writing a ton of code for the huge amount of data needed to represent a scoresheet.

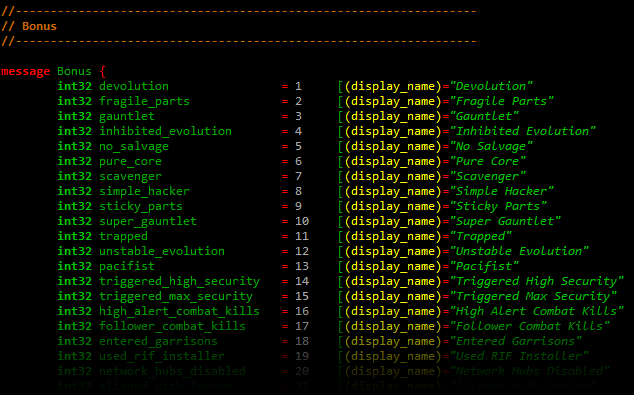

Taking the scoresheet’s Bonus section as an example, it currently contains 74 entries…

Writing out all the code to load all these, even if just one line each with the help of macros, is kinda excessive, not to mention having to update them any time new ones are added or existing ones are modified in the game. And this is just one section! What if we could just have it update automatically any time there are changes?

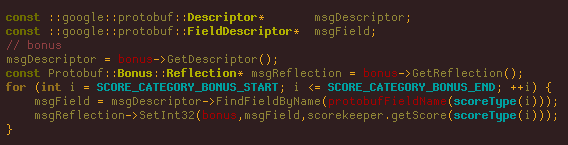

There’s a much easier way that relies on the fact that I already have both enums and strings associated with all of these entries: Reflection. It’ll be slightly slower, but again the performance aspect is negligible because loading protobufs is not something the game is generally doing.

This bit of code loads the entire Bonus section, and doesn’t need to be touched in the future even if the section is expanded with more entries:

FindFieldByName() is our friend, a protobuf method that does exactly that, and I just have to feed it the right name based on the scoreType in question, properly converted from my own string format to that required by the protobuf API via protobufFieldName().
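As a rough sketch of the name-driven approach, and not the actual Cogmind code: the conversion below assumes display-style names such as "High Alert Kills" being turned into snake_case protobuf field names, which is a guess at what a function like protobufFieldName() has to do. To keep the example free of the protobuf library, a `std::map` keyed by field name stands in for the message; `ScoreEntry` and `loadSection` are hypothetical names.

```cpp
#include <cctype>
#include <cstddef>
#include <map>
#include <string>
#include <vector>

// Hypothetical conversion from a display-style name to a protobuf-style
// field name: lowercase letters and digits, separators become underscores.
std::string protobufFieldName(const std::string& displayName) {
    std::string out;
    for (std::size_t i = 0; i < displayName.size(); ++i) {
        unsigned char c = static_cast<unsigned char>(displayName[i]);
        if (std::isalnum(c)) {
            out += static_cast<char>(std::tolower(c));
        } else if (!out.empty() && out[out.size() - 1] != '_') {
            out += '_';  // collapse any run of separators into one underscore
        }
    }
    if (!out.empty() && out[out.size() - 1] == '_') out.erase(out.size() - 1);
    return out;
}

struct ScoreEntry {
    std::string name;  // the game's own name for the entry
    int value;
};

// Stand-in for the reflection step. With the real library the lookup would be
// message.GetDescriptor()->FindFieldByName(name), followed by something like
// message.GetReflection()->SetInt32(&message, field, value).
// Returns how many entries found a matching field.
int loadSection(const std::vector<ScoreEntry>& entries,
                std::map<std::string, int>& message) {
    int loaded = 0;
    for (std::size_t i = 0; i < entries.size(); ++i) {
        std::map<std::string, int>::iterator it =
            message.find(protobufFieldName(entries[i].name));
        if (it == message.end()) continue;  // like FindFieldByName() returning NULL
        it->second = entries[i].value;
        ++loaded;
    }
    return loaded;
}
```

The loop never mentions an individual field, which is what lets a section grow without the loading code changing.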

This approach works great for the majority of scoresheet sections, but unfortunately there’s still one (rather big) roadblock: the stats section storing separate sets of data for every map.

Now technically if I stuck to having the scoresheet.proto format reflect the internal data structures, all of the stats could’ve easily been handled in the same manner, and if it was just me working on this project that’s probably how it would’ve ended up, but Will convinced me that for the online system we needed to store the run data differently than I was doing it internally.

In short, this meant the stats section is going to need… a lot of code. Fortunately all of the relevant scoresheet data is defined in the game’s external text file described in Building the Ultimate Roguelike Morgue File, Part 1: Stats and Organization, so it can be used to generate the code to convert it. Yay, more generated code xD

So it turns out that part of scoresheet.proto--the messages related to general per-map stats--is generated by a script, and the source to load it at run time is also generated.

Excerpt from the 762-line generated source file that loads all per-map stat-related messages and their submessages (and sometimes submessages of those submessages!) based on the game stats recorded at the time.
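The generation step can be illustrated with a small sketch. The post only says a script does this, so the C++ generator below is a stand-in written under assumptions: `StatDef`, `generateLoader`, and the shape of the emitted function are all hypothetical. The emitted lines rely only on the `set_<field>()` naming that protoc uses for generated C++ setters.

```cpp
#include <cstddef>
#include <sstream>
#include <string>
#include <vector>

// One stat as it might be listed in an external definitions file:
// the game's enum name plus the matching protobuf field name.
struct StatDef {
    std::string enumName;
    std::string fieldName;
};

// Emit the source of a loader function with one setter call per stat.
std::string generateLoader(const std::string& messageType,
                           const std::vector<StatDef>& stats) {
    std::ostringstream out;
    out << "void load" << messageType << "(" << messageType
        << "* msg, const int* stats) {\n";
    for (std::size_t i = 0; i < stats.size(); ++i) {
        out << "    msg->set_" << stats[i].fieldName
            << "(stats[" << stats[i].enumName << "]);\n";
    }
    out << "}\n";
    return out.str();
}
```

Adding a stat to the definitions file and rerunning the generator then updates the loader without anyone editing it by hand.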

Here I should point out that all this last-minute large-scale conversion stuff could’ve been avoided if Cogmind simply used protobufs natively for all of the scoring data and stats rather than a separate format, which is what most people would probably do with protobufs. With that approach, when it comes time to upload everything is already packaged and ready.

There are a number of reasons I didn’t do that:

- For internal use I prefer a stat-first organization over what was decided would be best for the online data (route-first and embedding a lot more meaning into the format structure itself).

- It would’ve been far more work to go back and change how everything works, as opposed to just converting the existing data when necessary once at the end of a run.

- I didn’t want the protobuf library to “invade” the rest of the code, so as an optional part of the architecture it can easily be disabled or removed.

- The internal scoresheet data was originally built and maintained to create the text version of the scoresheet, so it’s more suited to that purpose than using the protobuf content as a starting point.

But anyway, that’s just me and this project. I’d still recommend protobufs as a pretty good solution to serve as the foundation for this sort of thing, or potentially even entire save files depending on the game!

Uploading via WinINet

Last time I described how for years I’d been using SDL_net to upload scoresheet files, but that’s not gonna cut it anymore. I defer to Will on networking matters where possible, and he said we need HTTPS, so we need HTTPS… Just gotta figure out how to do that xD. He listed some options to get me started, though for me it’s always a huge pain (or complete roadblock) to simply add libraries and get basic stuff actually working at all, so I wasn’t too confident in my ability to implement them.

gRPC

One seemingly particularly good option would be gRPC, since it’s been built to integrate with protobufs in the first place. Unfortunately it’s not compatible with Windows XP, which Cogmind still supports, and I think it would also require upgrading my project to VS2015 or so. While it’s possible I’ll eventually drop XP support anyway and probably one day upgrade to a newer version of VS, there’s no reason to do those things now if there’s a workable alternative, so I passed on this option.

libcurl

I was familiar with libcurl from my research years back before deciding on SDL_net, plus I’ve heard it recommended by other devs, but setting it up was nowhere near my idea of easy. Although I got pretty far in the process, it seemed that for SSL support I also needed to include OpenSSL, which is built against VS2017. Regardless, I eventually got it down to only one remaining linker error that I didn’t even understand, and ended up giving up on libcurl.

I’m also generally against adding a bunch more heavy DLLs to the game, especially for one little feature, another strike against libcurl. (Adding just protobuf support already increased the size of COGMIND.EXE by 15% xD)

WinINet

What better option than to cheat on download size and use native Windows DLLs? :P

Will couldn’t recommend WinINet, but I’m glad he listed it as a last resort because it’s right up my alley. Windows is good with backwards compatibility, so that also makes it more likely that I’d be able to use it easily despite using relatively old tech compared to so many of the other networking libraries out there. Remember, I’m not writing some persistent online application, or trying to do any kind of multiplayer networking--all I want is the occasional HTTPS data transfer, so it would seem kinda ridiculous to use anything more than the bare minimum necessary to achieve that goal.

For this option all I had to do was simply link “Wininet.lib” and start writing code! Love it :)

Well, the code part wasn’t as straightforward as I’d like. As with SDL_net, how to actually use WinINet properly took a little while to figure out, even more so since we needed SSL. The example in the docs was a helpful start, but didn’t cover everything I needed to know in order to conduct a successful transfer. I eventually came up with workable code by mixing and matching various solutions found on the net.

Of course it needs a nice C++ wrapper to simplify everything:

The header for a simple C++ class capable of handling HTTPS connections, along with a sample of how to use it (as of now that test will actually retrieve the latest Cogmind version number and news). The full header can be downloaded here, and the source here (file extension needs to be changed to .cpp--it was changed for hosting due to security reasons).

Altogether it’s less than 200 lines, and in addition to that test above, as the class was developed I ran a series of simple incremental tests until eventually reaching the point where I could encode and upload an entire scoresheet in protobuf form. (I’ll show an example of this further below when we get to crypto signing.)

Storing the Data Online

These scoresheets are uploading… to where, now? I can’t get into a lot of detail here, because much of it is currently a black box to me. The network side is Will’s area, but here are the basic steps I needed to finish hooking everything up:

- On gridsagegames.com I created a new subdomain that points to a Heroku service. This is easily done in cPanel with a simple CNAME record.

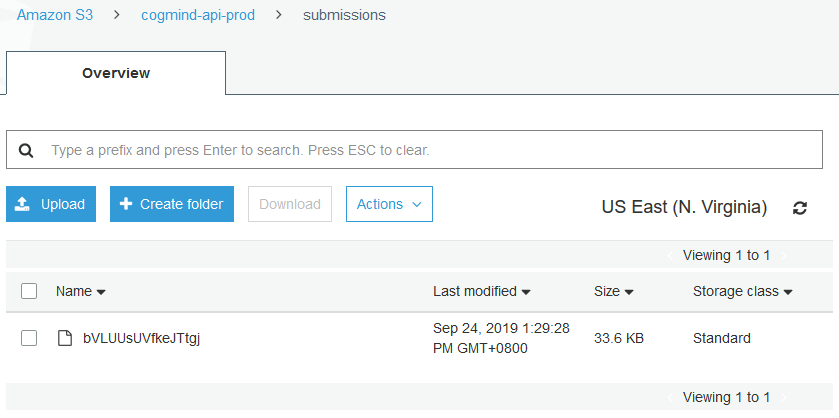

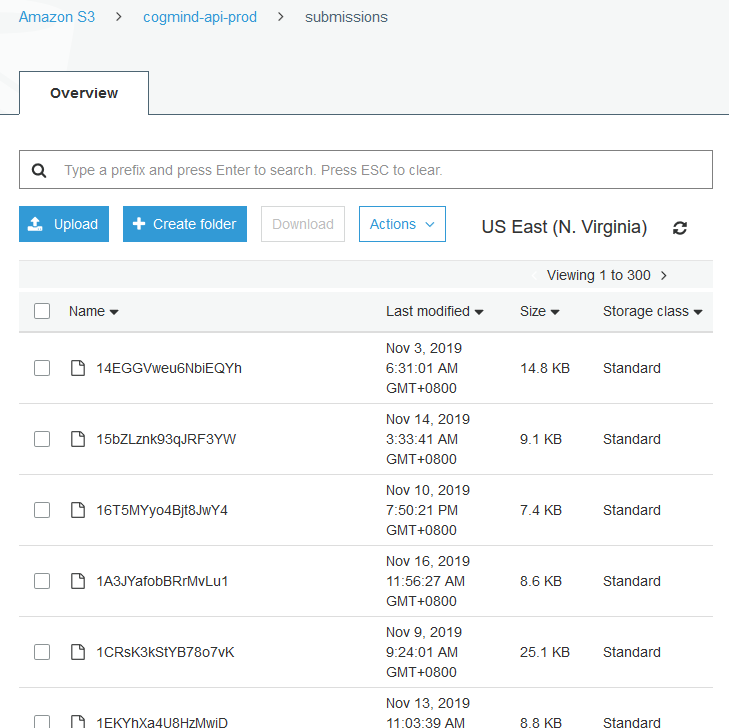

- I set up an AWS account which is where the Heroku service built by Will stores all the uploaded scoresheets for processing/reference, in an AWS S3 bucket.

For me, from there it was just a matter of using HttpsConnection to send a scoresheet and watch it appear in the bucket \o/

Although there probably won’t be any issues with the data on AWS, you never know what can happen and backups are essential, so in exploring backup options I found I can use the AWS CLI to download everything in the bucket to my local computer (instructions), from where it would also be included in my own automated offsite backups.

I had just set up a script to do that automatically every day, and of course I mention this to Will and he immediately writes a program to both do this as well as limit downloading to only new files, and zip everything up xD

Downloading Cogmind score data via AWS CLI. This was about a week after Cogmind’s first public version including the new scoresheet system.

Additional Features

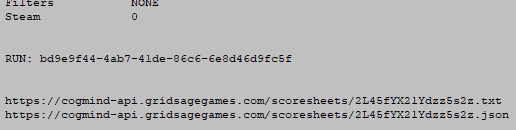

Since the leaderboards based on this new system weren’t ready when Beta 9 released, but in the meantime I wanted people to at least have a chance to see the new data as it appears online (while being able to easily share their complete run info without sending a file), I had the idea to append URLs for their run data on the server to the end of their scoresheet once it was successfully uploaded.

See a live scoresheet sample here in text format, which is identical to the local version, and the same scoresheet data here as JSON, which essentially mirrors the protobuf format.

Depending on your browser, the JSON file might appear as a barely readable mess, or if your browser knows what it’s doing and has built-in functionality for parsing them there will be formatting options for readability and/or interaction.

Reuploading

One feature missing from the old system, which I kept putting off until the new system was in place, is a way to avoid losing run data. What I mean are those times that the website server happens to be unreachable for some reason, or maybe the player is currently offline or their own internet connection has issues. Under the old system, the only way that score/data could ever make it to the leaderboards/stats was if they gave me the file and I manually uploaded it, which I did do for special runs by players on request, but now that we have the real, final system, this calls for something automated.

In cases where the player has uploads activated in the options but the process can’t be carried out or fails for some reason, the complete protobuf data is archived in a temporary subdirectory in their local scores folder. Each time Cogmind starts up, it checks that directory for scores that failed to upload, and tries them again. This saves time, saves headaches, and ensures we have all of the runs that we should! Now I won’t feel nearly as bad if the service is inaccessible for some reason :)
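The retry-on-startup logic might look something like the sketch below. It assumes C++17 for `std::filesystem` (newer than the compiler mentioned earlier in this post), and the `upload` callback is a hypothetical stand-in for the real HTTPS transfer; `retryPendingUploads` is likewise a made-up name.

```cpp
#include <filesystem>
#include <fstream>
#include <functional>
#include <iterator>
#include <string>
#include <system_error>
#include <vector>

namespace fs = std::filesystem;

// Try to upload every archived scoresheet found in pendingDir.
// `upload` receives the raw file contents and returns true on success.
// Files that upload successfully are deleted; the rest stay for next time.
// Returns the number of files uploaded and removed.
int retryPendingUploads(const fs::path& pendingDir,
                        const std::function<bool(const std::string&)>& upload) {
    if (!fs::is_directory(pendingDir)) return 0;

    // Collect paths first so nothing is deleted while iterating.
    std::vector<fs::path> files;
    for (const auto& entry : fs::directory_iterator(pendingDir)) {
        if (entry.is_regular_file()) files.push_back(entry.path());
    }

    int uploaded = 0;
    for (const auto& path : files) {
        std::ifstream in(path, std::ios::binary);
        std::string data((std::istreambuf_iterator<char>(in)),
                         std::istreambuf_iterator<char>());
        in.close();  // release the file before trying to delete it
        if (upload(data)) {
            std::error_code ec;
            fs::remove(path, ec);
            if (!ec) ++uploaded;
        }
    }
    return uploaded;
}
```

Keeping a failed file in place rather than moving it means a run is only ever dropped from the queue after the server has accepted it.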

Cryptographic Signing with libsodium

My original dumb I-don’t-know-networking approach for identifying the player associated with a given scoresheet was going to be to upload that scoresheet’s data alongside their player GUID, which would not be part of the public protobuf format. As a private value, it would prevent a malicious actor from spoofing another player and messing up their historical data, and our aggregate data along with it.

Unfortunately, keeping this unique identifier private would also decrease the accuracy of third-party data analysis (which, as we’ve seen, is something a number of Cogmind players like to do!).

At Will’s suggestion, however, there’s a way to add the same extra layer of security without hiding any relevant scoresheet values: have players sign their uploads with a local private key! That enables us to safely ban specific players as necessary since it’s no longer possible for someone to pretend they’re someone else, making it easier to ensure the integrity of the leaderboards and stats.

So what does it actually mean to “sign” something… I had no idea, either, but the libsodium docs explain it all pretty well, including examples of how to do it. Super simple. libsodium is definitely my type of library! Just drop it in, write a few lines of code following their examples, and enjoy the results.

Players store their own private key locally, and the public key (more or less like a GUID, insofar as it’s a unique identifier) is included with the protobuf along with a signature created based on the private key and the data to be transferred. The online service checks that the provided public key properly matches the data signature, to confirm that the data was, indeed, uploaded by the player they say they are, and then adds that data to the AWS S3 bucket. If a data transfer fails that check, it’s ignored.

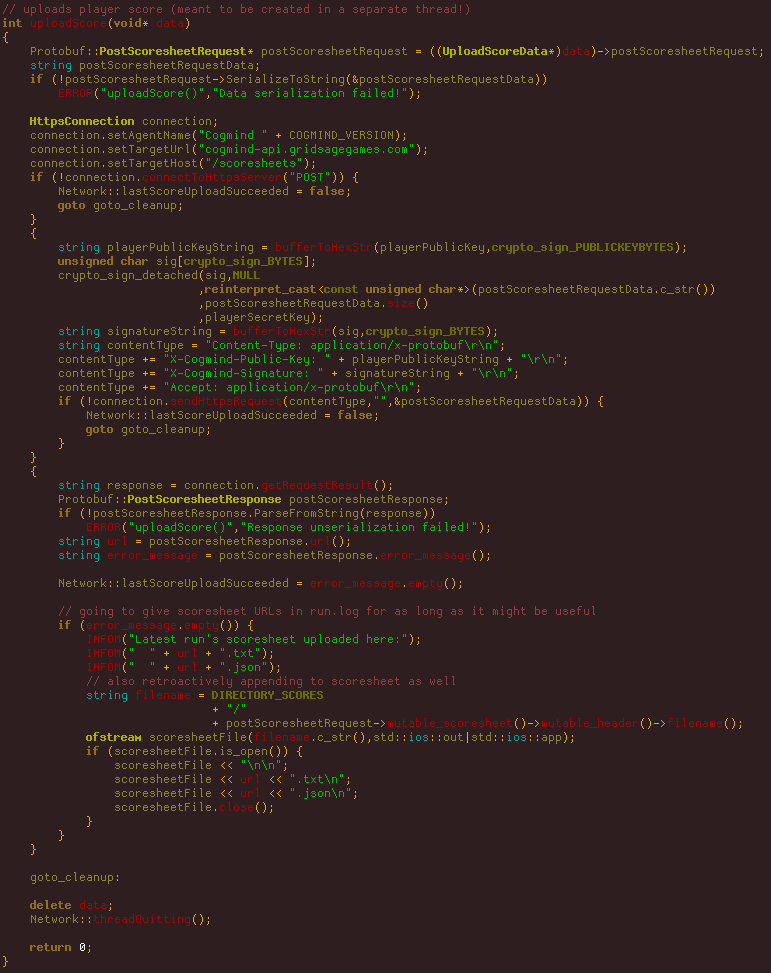

Here’s the code for using HttpsConnection from earlier to upload a signed protobuf scoresheet. It took me a while to figure out how to get the protobuf data in the right format for transfer over HTTP, so maybe this will help someone in the future:

The function for uploading a signed Cogmind scoresheet to the server, using libsodium and the WinINet wrapper from earlier.

Also note that as per the libsodium docs there are two different ways to use signing, either “combined” or “detached.” The former actually inserts the signature at the beginning of the data itself, whereas detached mode allows you to send the signature separately. The sample use case above is using the detached mode, inserting the key in the HTTP header instead of messing with the protobuf.
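One practical detail of sending a detached signature in a header is that the signature is raw binary (64 bytes for libsodium's default signing scheme, as produced by `crypto_sign_detached()`), while HTTP headers are text. The sketch below shows one way to bridge that with hex encoding. Both the choice of hex and the `X-Signature` header name are assumptions for illustration, not necessarily what Cogmind's server expects.

```cpp
#include <cstddef>
#include <string>
#include <vector>

// Encode raw bytes as lowercase hex so they can travel in a text header.
std::string toHex(const std::vector<unsigned char>& bytes) {
    static const char digits[] = "0123456789abcdef";
    std::string out;
    out.reserve(bytes.size() * 2);
    for (std::size_t i = 0; i < bytes.size(); ++i) {
        out += digits[bytes[i] >> 4];
        out += digits[bytes[i] & 0x0F];
    }
    return out;
}

// Build a header line carrying a detached signature.
// "X-Signature" is a hypothetical header name.
std::string buildSignatureHeader(const std::vector<unsigned char>& signature) {
    return "X-Signature: " + toHex(signature) + "\r\n";
}
```

With the signature kept out of the body, the uploaded bytes remain a plain protobuf message that any protobuf reader can parse as-is.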

And there you have it, the gist of Cogmind’s new handling for network-related stat stuff! It’s been a long time since I added someone to the Cogmind credits, but I couldn’t have done this without Will, so there he is :)

The AWS web interface itself only allows us to get a glimpse of what’s there (psh, viewing 1 to 300…), but there are thousands since the Beta 9 release.

Next up is the new leaderboards based on all this data… And I’m still not sure how I’ll be doing the usual data visualization and statistical analysis now that everything is in a new format, but I’m sure it’ll work out.